Employees who use Microsoft 365 Copilot to transform the way they work now have a new tool to help them even more: the agent.

At Microsoft, we’re deploying a spectrum of agents to fulfill different needs, from knowledge sources for individual employees to helpers that handle specific tasks for our teams, our organizations, and the entire company.

Of the different kinds of agents, retrieval agents are the easiest to implement. Employees can build them using Microsoft Copilot Studio agent builder or SharePoint. After a few quick steps, the agents they create retrieve information from data in our Microsoft 365 tenant, such as a SharePoint library or collection of libraries, then reason over it, summarize it, and answer employees’ questions.

As one of the first enterprise IT organizations to deploy this capability to our employees, we’re starting to see their impact first-hand, and along the way, we’re learning lessons that our customers can use to unlock their own agentic abilities.

Copilot + retrieval agents: A new way to drive enterprise AI value

So, what are retrieval agents?

First, it’s important to understand where these Microsoft 365 Copilot extensions fit within the emerging agentic environment.

Copilot agents expand Copilot’s knowledge and skills, and they can even operate autonomously to complete tasks or automate processes. Retrieval agents operate at the simplest end of the agentic spectrum and are the easiest for employees to create.

Types of agents

“Retrieval agents wrap around knowledge sources and data sets, and they include system prompts so they behave the way their creators want,” says Aisha Hasan, Power Platform and Copilot Studio product manager for Microsoft Digital. “They’re AI helpers that our employees can create to find what they want without having to search around manually.”

A retrieval agent is essentially Copilot, plus its creator’s instructions, plus grounding in a particular data set. These extensions can accomplish a wide variety of jobs, from acting as an event planning assistant to surfacing insights into business optimizations to sharing internal guidance on leadership best practices.
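To make the "Copilot plus instructions plus grounding" formula concrete, here is a minimal Python sketch of how those three pieces combine into a single grounded request. It is a conceptual model only, not how Copilot Studio is implemented: the `RetrievalAgent` and `Document` classes are hypothetical, the word-overlap ranking stands in for real semantic retrieval over Microsoft 365 data, and the sketch stops at building the prompt that would be handed to the language model.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Document:
    source: str  # hypothetical label, e.g. a SharePoint file name
    text: str


def _words(text: str) -> set[str]:
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class RetrievalAgent:
    name: str
    instructions: str  # the creator's system prompt
    knowledge: list[Document] = field(default_factory=list)  # grounding data

    def retrieve(self, question: str, top_k: int = 2) -> list[Document]:
        """Rank documents by naive word overlap with the question (a stand-in for real retrieval)."""
        q = _words(question)
        scored = [(len(q & _words(doc.text)), doc) for doc in self.knowledge]
        scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
        scored.sort(key=lambda pair: pair[0], reverse=True)  # best match first
        return [doc for _, doc in scored[:top_k]]

    def build_prompt(self, question: str) -> str:
        """Combine instructions, retrieved grounding, and the question into one request."""
        context = "\n".join(f"[{d.source}] {d.text}" for d in self.retrieve(question))
        return f"{self.instructions}\n\nContext:\n{context}\n\nQuestion: {question}"


agent = RetrievalAgent(
    name="Event planner",
    instructions="Answer using only the context. Cite sources.",
    knowledge=[
        Document("venues.docx", "The Redmond campus venue seats 200 people"),
        Document("catering.docx", "Catering orders are due two weeks before the event"),
    ],
)
print(agent.build_prompt("How many people does the venue seat?"))
```

The design point the sketch illustrates is that the agent adds no new model: it only scopes what the model sees (the knowledge list) and how it should behave (the instructions).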

“If we think of Copilot as the UI for AI, retrieval agents are a further layer on that UI that access and reason over their organization’s data,” says Mykhailo Sydorchuk, Customer Zero lead for Microsoft 365 integrated apps at Microsoft Digital. “They can also address other data sets and systems using Copilot, without the need to build custom connectors or orchestration.”

At Microsoft, retrieval agents are accelerating our AI journey by enabling employees to tailor Copilot’s capabilities to their own work and specific knowledge sources. Their value comes from creating micro-experiences that meet specialized needs to enhance productivity and information discoverability.

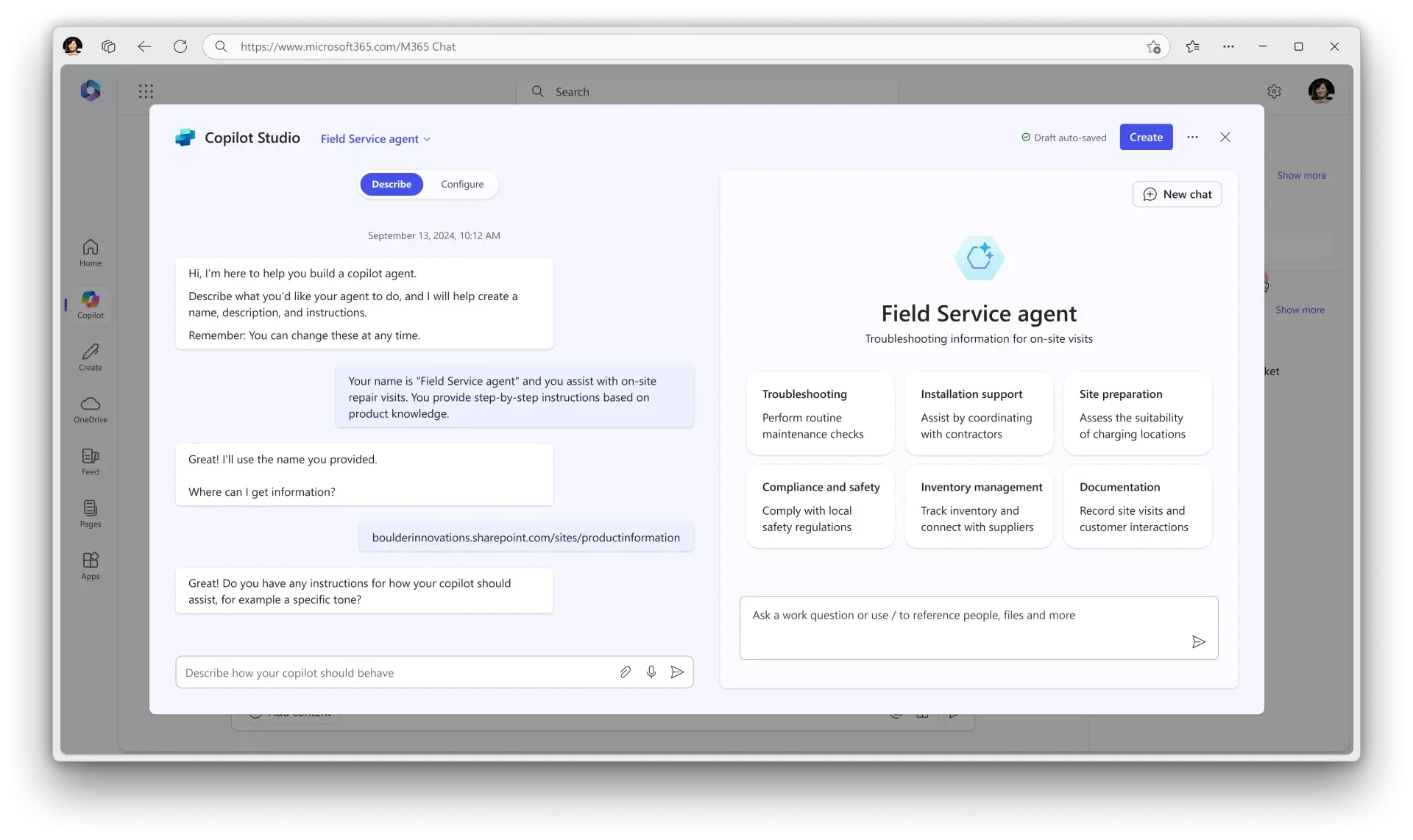

Creating retrieval agents couldn’t be easier. One option is through Microsoft Copilot Studio agent builder, accessible through Copilot Chat within Microsoft Teams. Employees can use natural language prompts and a simplified configuration process to provide custom instructions, tell their agents how to behave, and provide specific data and knowledge sources.

SharePoint agents are another way to add AI assistance to everyday work. They let users turn SharePoint sites and documents into scoped agents that act as subject matter experts for specific business needs. Site owners or admins simply customize their SharePoint agent’s branding and purpose, specify the sites, pages, and files it should get information from, and define customized prompts tailored to its purpose and scope.

“We’re targeting our core enterprise professional developer scenarios with more advanced tooling,” says Amy Rosenkranz, principal product manager for Customer Zero Extensibility in Microsoft Digital. “But with Copilot Studio agent builder and retrieval agents, we’re empowering our employee citizen developers to experiment freely and create agents easily, then share them out, all surrounded by the right governance and management process.”

Enabling retrieval agents while ensuring our organization’s integrity

While agents represent a leap forward in AI-powered productivity, capturing that value means balancing the freedom to explore with the need to protect our company.

Microsoft is one of the first and largest organizations to extend Microsoft 365 Copilot by enabling agents. As a result, our team here in Microsoft Digital, the company’s IT organization, has been hard at work ensuring those agents don’t put the company at risk.

The level of risk an agent presents largely depends on its access to data sources and the actions it can take. More advanced task and autonomous agents need to cross Microsoft 365 tenant boundaries to enable actions. But retrieval agents are much simpler.

Retrieval agents typically access only data within tenant boundaries through graph connectors. Although they occasionally need to connect with information outside the tenant, they only retrieve data and don’t transmit it externally. As a result, administering and governing these agents is much simpler.

“The beauty of retrieval agents is that, for the most part, they’re grounded in Microsoft 365 data, so they provide a single-pane view within Teams instead of forcing users to go from one source to another to seek out information,” Hasan says. “Whatever your window of productivity might be, you can interact with the information you need without constantly switching context.”

We started small, experimenting with retrieval agents with trusted stakeholders and reviewing each one to ensure it didn’t present unacceptable risks to the company. Thanks to what we learned during that process and the data safety controls we maintain across our tenant, we’ve minimized the scenarios where agents require reviews. Reviews now come into play only for more complex agents that build on bespoke graph connectors, API plugins, or custom orchestration to access external knowledge sources and take actions.

Our confidence in retrieval agents’ safety comes from a few key factors.

Administration and configuration

Retrieval agents’ simplicity also helps us keep the risk of data overexposure low. Unlike more complex agents that require security assessments, threat modeling, privacy assessments, and Responsible AI reviews, we’re able to define our policies for retrieval agents at the agent builder environment level.

We empower tenant administrators and our partners on the Microsoft Security team to apply data loss prevention policies that govern what individual employees can enable for their retrieval agents. At this level, everyone in the company has the same configuration and tools available, and automation largely handles agent reviews and assessments. We based these pre-configured settings on the same security, privacy, and regulatory compliance standards we apply to any internally built application.

Approved graph connectors

Graph connectors increase the discoverability of external data by integrating it into an agent’s grounding. At Microsoft, we’ve onboarded a series of approved connectors that creators can use to incorporate additional data for their agents to reason over. They include connectors for external websites as well as tools like Azure DevOps and ServiceNow.

Our criteria and review process for connectors ensure that agents don’t put our tenant at risk. As long as a connector is approved, employees are free to use it to create their agents.
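The review triggers described in this article (bespoke graph connectors, API plugins, and custom orchestration) together with an approved-connector list amount to a simple routing rule: self-service by default, manual review only when a trigger fires. The Python sketch below models that rule under stated assumptions. The connector identifiers in `APPROVED_CONNECTORS` and the `AgentConfig` fields are hypothetical placeholders, not real Microsoft Graph connector IDs or Copilot Studio settings.

```python
from dataclasses import dataclass, field

# Hypothetical identifiers for an organization's approved connectors.
# These are illustrative labels, not real Microsoft Graph connector IDs.
APPROVED_CONNECTORS = frozenset({"enterprise-websites", "azure-devops", "servicenow"})


@dataclass
class AgentConfig:
    name: str
    connectors: list[str] = field(default_factory=list)
    uses_api_plugins: bool = False           # plugins can take actions, not just read
    uses_custom_orchestration: bool = False  # bespoke orchestration logic


def review_reasons(agent: AgentConfig) -> list[str]:
    """Return why an agent needs manual review; an empty list means self-service."""
    reasons = []
    for connector in agent.connectors:
        if connector not in APPROVED_CONNECTORS:
            reasons.append(f"unapproved connector: {connector}")
    if agent.uses_api_plugins:
        reasons.append("API plugins can take actions")
    if agent.uses_custom_orchestration:
        reasons.append("custom orchestration")
    return reasons


faq_agent = AgentConfig("IT FAQ", connectors=["servicenow"])
crm_agent = AgentConfig("Deal helper", connectors=["my-crm"], uses_api_plugins=True)
print(review_reasons(faq_agent))  # simple retrieval agent on an approved connector
print(review_reasons(crm_agent))  # routed to a structured review
```

Returning reasons rather than a bare yes/no is a deliberate choice in this sketch: it tells a creator what to change to stay on the self-service path.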

Ensuring Responsible AI standards at the platform layer

Microsoft has been at the forefront of establishing Responsible AI principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. To ensure we enabled retrieval agents that would respect Responsible AI standards, we needed to translate those concepts into concrete policies we could apply at the platform level.

Microsoft’s Office of Responsible AI has been an indispensable resource during this process. They maintain a comprehensive and evolving list of policy statements around restricted uses for AI capabilities. Those include using AI to infer emotions or personal characteristics, to assess employee performance, or to perform social scoring.

As our implementation of retrieval agents matured, we instituted controls at the platform layer that block these restricted uses of AI by defining what kinds of information an agent can retrieve. Now, Copilot Studio agent builder can evaluate an agent against a wide array of Responsible AI parameters and make determinations based on the parameters we’ve set.

For example, if a manager attempted to create a retrieval agent that would assess employee performance based on meeting attendance, guardrails at the platform layer would curtail that ability. Naturally, as we develop our Responsible AI policies further, these parameters will shift and grow, and we’ll continue to refine our configurations.
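The idea of screening an agent against a list of restricted uses can be illustrated with a deliberately naive Python sketch that checks an agent’s instructions for phrases tied to each restricted category named above. This is an assumption-laden toy: the category names paraphrase the restricted uses listed earlier, the phrase lists are hypothetical, and production guardrails such as those in Copilot Studio are not described in this article and very likely do not rely on keyword matching.

```python
import re

# Hypothetical, deliberately tiny phrase lists keyed by restricted-use category.
# Phrases are lowercase words separated by single spaces.
RESTRICTED_USES = {
    "emotion inference": ["infer emotion", "infer emotions", "detect mood"],
    "employee performance assessment": ["assess employee performance", "rate employees"],
    "social scoring": ["social score", "trustworthiness score"],
}


def flag_restricted_uses(instructions: str) -> list[str]:
    """Return restricted-use categories whose phrases appear in the instructions."""
    # Normalize to lowercase words joined by single spaces, padded so that
    # phrase matches must fall on word boundaries.
    text = " " + " ".join(re.findall(r"\w+", instructions.lower())) + " "
    return [
        category
        for category, phrases in RESTRICTED_USES.items()
        if any(f" {phrase} " in text for phrase in phrases)
    ]


print(flag_restricted_uses("Assess employee performance based on meeting attendance."))
print(flag_restricted_uses("Summarize our event planning documents."))
```

The padding with spaces matters even in a toy like this: without it, "rate employees" would falsely match text such as "accurate employees".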

Thanks to these foundations, we’re now at the point where we feel comfortable giving every Microsoft employee access to Microsoft Copilot Studio agent builder and the freedom to create retrieval agents. It’s all part of our principle of employee self-service with guardrails.

“It’s a constant evaluation,” says Hasan. “Our goal is to allow as much freedom as we can with retrieval agents so employees can increase productivity without going down the path of greater customization that requires more intensive review.”

Different organizations are at different stages of their AI maturity journey. As you experiment with Copilot extensibility, it will be important to assess your organization’s level of experience implementing AI tools, your employees’ state of readiness and training, key risk areas, and sensitive scenarios.

From there, you’ll be able to use out-of-the-box configuration capabilities in Copilot Studio agent builder to establish guardrails that work for you. It will take careful collaboration across security, privacy, legal, and IT teams, but we’re already learning that the benefits are worth the effort.

Ease and access drive creativity and new ways to work

Now that we’ve empowered our employees to build retrieval agents organization-wide, examples of creativity and innovation are popping up all over the company. Ease of use and freedom have a lot to do with this proliferation.

Using Copilot Studio agent builder

“Users who want to build agents with no code can select from premade templates using natural language, or they can fill out a few fields,” says Brian Moran, senior product manager on the Employee Experiences team at Microsoft Digital. “They can get their agents up and running in minutes.”

Creative examples of the ways that employees and teams are using retrieval agents include:

- IDEAS Copilot democratizes access to our Insights, Data, Engineering, Analytics, AI, and Systems (IDEAS) knowledge base to help users act on crucial usage information without the need for technical expertise. The agent fully integrates with Microsoft Teams, so employees can dig into data across sales, marketing, finance, operations, and more using natural language queries in their familiar working environment.

- Security Comms Agent helps our communications team create blog posts. Team members provide a prompt that includes the content’s purpose and context, and the agent accesses internal documents about business objectives, positioning frameworks, voice guidelines, and our Microsoft Digital communications and marketing plan, as well as the internet and specific Microsoft-owned learning sites for added context. From there, the agent creates a first draft that aligns with our Microsoft Digital positioning, objectives, and voice.

- Know Your Customer leverages AI to provide a comprehensive view of customer profiles. It accesses an overview of a customer’s tenant, usage metrics for Copilot, service incident reports, and more to provide usage statistics and health data for Microsoft 365 apps, email, meetings, Microsoft Viva, and other products to enhance customer engagement and support. The agent can even generate a tenant-specific Microsoft PowerPoint dossier for ease of use.

- Prompt Buddy Agent helps employees discover ready-to-use prompts that eliminate the need for experimentation and prompt engineering. Employees use natural language queries to discover AI prompts their colleagues have shared across industries, roles, personas, and topics, all without leaving Copilot Chat. As a result, they can save valuable time by streamlining AI-assisted workflows.

- Communications Plan Assistant accesses a library of prompts our Microsoft Viva communications team has developed to quickly draft content. The team communicates with the agent conversationally, providing feedback and selecting from the options it provides, then populates pre-defined sections in their communications plan template. At the end of the interaction, they can request a summary with all the final content that will go into the plan.

“By trusting our employees to imagine and create their own extensions for Microsoft 365 Copilot, we’re making it possible to personalize enterprise AI like never before,” says Nathalie D’Hers, corporate vice president of Microsoft Digital. “Empowering our people to create retrieval agents in a responsible environment is the ideal combination of human creativity and AI capabilities, and we’re confident it will unlock a new era of innovation.”

Here are some tips for getting started with retrieval agents at your company:

- Establish early communication and collaboration with members of your security, legal, compliance, IT, and any other teams who can help you define ways to configure Copilot Studio agent builder safely.

- Agents rely on data, so ensure your enterprise data is clean, well-governed, and accessible through scalable pipelines.

- Start slowly. Enable retrieval agents for smaller, select groups to work through any configuration issues or concerns before widening access. Plan to review everything you do at each step, and use those learnings as a basis for configuration and automation as time progresses.

- Balance employee empowerment with organizational safety. That balance will evolve as your organization’s AI maturity progresses.

- Use simple retrieval agents as a springboard to more complex extensions that require a structured review process.

Want to explore the possibilities for creating agents with Microsoft Copilot Studio? Try it free here.

- Witness AI-powered agents in action to see how we’re embracing this new ‘agentic’ moment at Microsoft.

- Learn how we’re finding deeper AI value at Microsoft with Microsoft 365 Copilot extensibility.

- Find out how we’re unlocking enterprise AI extensibility at Microsoft with Microsoft Copilot Studio.

- Check out how we’re empowering our employees with the Microsoft Power Platform.